Building InPhotos Part 2: Adding Face Recognition and Integrating It with Search

Now that we can search through photos using natural language, it would help a lot if I could search by people as well. That’s exactly what I worked on last week.

Just a note: this article is part of a series, and you can find the previous one here.

How did it all go down?

Again, it started with a Google search, but it led me down quite a deep rabbit hole. Face recognition has several steps. First, we have to detect faces and then align them. Once we have that, we can send each face to a neural network to generate an embedding. These embeddings can be used in several ways: they can be matched against an existing database of faces, or you can create embeddings for many faces and cluster them.

I tried out a few methods to detect faces. The first was the traditional Haar cascade classifier; however, the results weren’t satisfactory.

Then, while reading about recent advances in face detection, I came across two papers:

- Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks - It introduces MTCNN

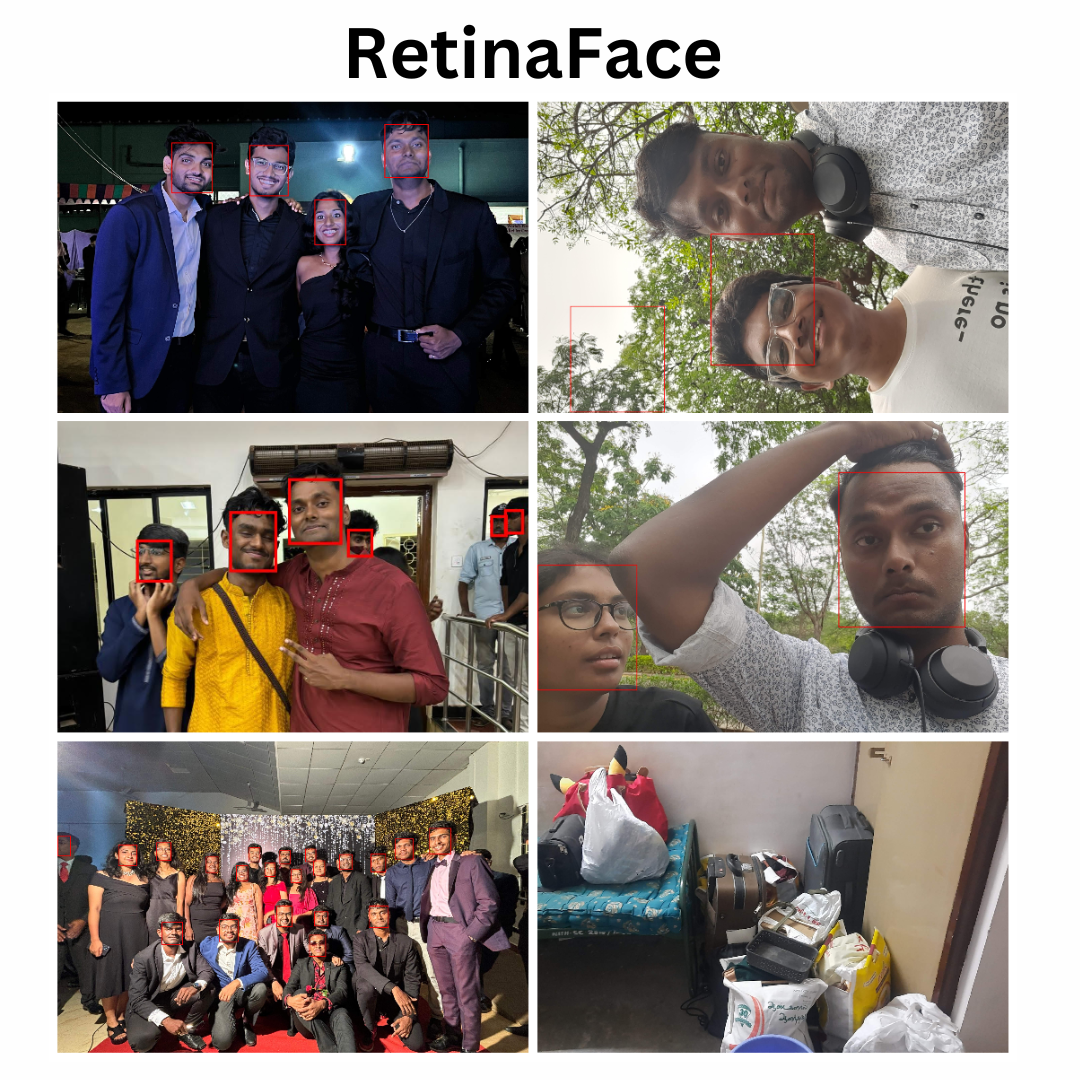

- RetinaFace: Single-stage Dense Face Localisation in the Wild - It introduces the RetinaFace model

Below you can see a comparison of the results

MTCNN’s results aren’t as good as RetinaFace’s. However, RetinaFace takes 120 seconds to process 64 photos on my system’s CPU, whereas MTCNN takes 73 seconds for the same photos.
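If you want to run a similar comparison yourself, here is a minimal timing sketch. It assumes each detector is wrapped in a plain Python callable that takes one image and returns a list of face boxes; `time_detector` and `detect_fn` are hypothetical names, not part of the MTCNN or RetinaFace libraries.

```python
import time
from typing import Any, Callable, Sequence


def time_detector(
    detect_fn: Callable[[Any], list], images: Sequence[Any], warmup: int = 1
) -> dict:
    """Time a face detector over a batch of images on the current device.

    detect_fn is a hypothetical wrapper: one image in, a list of face boxes out.
    """
    # Warm-up calls so one-off costs (lazy model loading, caches) are not timed.
    for image in images[:warmup]:
        detect_fn(image)

    start = time.perf_counter()
    total_faces = 0
    for image in images:
        total_faces += len(detect_fn(image))
    elapsed = time.perf_counter() - start

    return {
        "total_seconds": elapsed,
        "seconds_per_image": elapsed / len(images) if images else 0.0,
        "faces_found": total_faces,
    }
```

Applied to the numbers above, that is 120 / 64 ≈ 1.88 s per photo for RetinaFace versus 73 / 64 ≈ 1.14 s per photo for MTCNN, so MTCNN needs roughly 39% less time per photo on this CPU.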

(Note: I didn’t consider using my GPU, as I want this to be accessible and fast on systems without a GPU.)

Reading through the papers and their implementations was a rewarding experience, as I learned quite a lot from them. I’ll cover these models in depth in a separate blog post.

If you are interested, have a look at these:

- Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks - https://arxiv.org/pdf/1604.02878v1

- RetinaFace Paper - https://arxiv.org/abs/1905.00641

- A tutorial showcasing the various parts of the MTCNN algorithm - https://github.com/xuexingyu24/MTCNN_Tutorial/tree/master

- RetinaFace Implementation - https://github.com/biubug6/Pytorch_Retinaface

MTCNN also outputs five facial landmarks (both eyes, the nose tip and the two mouth corners), which can be used for alignment.
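A common way to use those landmarks is to estimate a similarity transform (rotation, uniform scale and translation) that maps them onto a fixed reference layout, then warp the image with it. The sketch below does this with Umeyama’s least-squares method in NumPy. Two assumptions to flag: the landmark order (left eye, right eye, nose, left mouth corner, right mouth corner) matches what your MTCNN implementation returns, and the 112×112 reference points are the template commonly used with ArcFace-style recognisers; whether EdgeFace expects exactly this template is not something I’ve confirmed.

```python
import numpy as np

# Reference 5-point layout for a 112x112 aligned crop, ordered as
# left eye, right eye, nose tip, left mouth corner, right mouth corner.
# Assumption: this ArcFace-style template suits the embedding model.
REFERENCE_112 = np.array(
    [
        [38.2946, 51.6963],
        [73.5318, 51.5014],
        [56.0252, 71.7366],
        [41.5493, 92.3655],
        [70.7299, 92.2041],
    ],
    dtype=np.float64,
)


def similarity_transform(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares similarity transform mapping src points onto dst points
    (Umeyama's method). Returns a 2x3 affine matrix."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    n = src.shape[0]

    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - src_mean, dst - dst_mean

    # Cross-covariance between the centred point sets, then its SVD.
    cov = dst_c.T @ src_c / n
    u, s, vt = np.linalg.svd(cov)

    # Force a proper rotation (det = +1) so the face is never mirrored.
    d = np.ones(2)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        d[-1] = -1.0
    rotation = u @ np.diag(d) @ vt

    src_var = (src_c ** 2).sum() / n
    scale = (s * d).sum() / src_var
    translation = dst_mean - scale * rotation @ src_mean

    return np.hstack([scale * rotation, translation[:, None]])


def apply_transform(matrix: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    points = np.asarray(points, dtype=np.float64)
    return points @ matrix[:, :2].T + matrix[:, 2]
```

The resulting matrix can then be handed to OpenCV, e.g. `cv2.warpAffine(image, M, (112, 112))`, to produce the aligned crop that goes into the embedding model.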

Generation of Facial Embeddings

To generate facial embeddings, I could have used the usual VGGNet or ResNet backbones. However, they felt too heavy for my application, and I wanted a model with far fewer parameters, so I went with EdgeFace.

The EdgeFace authors modify the EdgeNeXt architecture and introduce a layer called the Low-Rank Linear Module (LoRaLin), which reduces the computation and parameters of fully connected layers by replacing each large weight matrix with two smaller low-rank ones.
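To see why a low-rank layer saves work, here is a small NumPy sketch of the general idea: a weight matrix W of shape (out × in) is approximated by B @ A, with A of shape (rank × in) and B of shape (out × rank). This is an illustration of low-rank factorisation, not EdgeFace’s actual LoRaLin code; the class name and the rank of 64 used below are arbitrary choices for the example.

```python
import numpy as np


class LowRankLinear:
    """Fully connected layer with weight W ~= B @ A, where
    A: (rank x in_features) and B: (out_features x rank).

    Illustrative sketch of the low-rank idea, not the EdgeFace implementation."""

    def __init__(self, in_features: int, out_features: int, rank: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.a = rng.standard_normal((rank, in_features)) / np.sqrt(in_features)
        self.b = rng.standard_normal((out_features, rank)) / np.sqrt(rank)
        self.bias = np.zeros(out_features)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # Two small matmuls (in -> rank -> out) instead of one big (in -> out).
        return (x @ self.a.T) @ self.b.T + self.bias

    def num_params(self) -> int:
        return self.a.size + self.b.size + self.bias.size


def dense_params(in_features: int, out_features: int) -> int:
    """Parameter count of an ordinary fully connected layer (weights + bias)."""
    return in_features * out_features + out_features
```

For a 512 → 512 layer with rank 64, the weights shrink from 512 × 512 = 262,144 to 2 × 64 × 512 = 65,536 (plus 512 bias terms either way), a roughly 4× saving in both parameters and multiply-adds per input, at the cost of only being able to represent rank-64 transformations.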

For more details, have a look here:

- Edgeface Paper - https://arxiv.org/abs/2307.0183

- Library - https://github.com/otroshi/edgeface

So now what are we going to do with these embeddings?

I was quite confused here and wandered through the forest fairly aimlessly. I’m not going to complain much, since I learned a lot about clustering from papers such as “Clustering Millions of Faces by Identity”. In the end, though, I did something simple that worked quite well.

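As I add an image, the program detects each face and generates its embedding. It then compares that embedding with the existing ones using cosine similarity: if the cosine distance (1 minus the similarity) is below a threshold, the face belongs to that person; otherwise, a new person is created. Every time we encounter an existing person in the database, we average the new vector into their stored embedding. Strictly speaking, this is a greedy online (incremental) clustering scheme rather than agglomerative clustering, which builds a hierarchy by repeatedly merging the closest pair of clusters.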
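Here is a minimal sketch of that loop. A few details are my own assumptions rather than InPhotos’ exact behaviour: each new face is compared against every person’s running-mean embedding and assigned to the closest one, the threshold of 0.4 is an untuned placeholder, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class Person:
    person_id: int
    centroid: np.ndarray  # running mean of this person's face embeddings
    count: int = 1


@dataclass
class FaceClusterer:
    """Greedy online clustering of face embeddings.

    threshold is a cosine *distance* (1 - cosine similarity); 0.4 is a placeholder."""

    threshold: float = 0.4
    people: list[Person] = field(default_factory=list)

    @staticmethod
    def cosine_distance(a: np.ndarray, b: np.ndarray) -> float:
        return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

    def assign(self, embedding: np.ndarray) -> int:
        """Return the person ID for this face, creating a new person if needed."""
        embedding = np.asarray(embedding, dtype=np.float64)

        # Find the closest known person (assumption: nearest match wins).
        best, best_dist = None, float("inf")
        for person in self.people:
            dist = self.cosine_distance(embedding, person.centroid)
            if dist < best_dist:
                best, best_dist = person, dist

        if best is not None and best_dist < self.threshold:
            # Incremental mean: new_mean = old_mean + (x - old_mean) / n
            best.count += 1
            best.centroid = best.centroid + (embedding - best.centroid) / best.count
            return best.person_id

        new_person = Person(person_id=len(self.people), centroid=embedding.copy())
        self.people.append(new_person)
        return new_person.person_id
```

Because cosine distance ignores vector length, it doesn’t matter that the running mean is no longer unit-length. The main trade-off of this greedy approach is order dependence: an early bad match can pull a person’s centroid away, which a batch method would be less sensitive to.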

This worked well and was fast, so I left it as is.

Integrating it with the current version of InPhotos

Storing People’s Face Embeddings and Their Names

I created another entity in ObjectBox and stored the embeddings there. However, I came across an unfortunate note in the ObjectBox documentation:

This makes connecting people with photos much harder. I found a workaround, but that’s all it is: it costs performance at query time, which is a problem for another day.

Each image stores a list of the IDs of the people in it, so when a query returns its results, I check whether the requested person is present in each result and return only the images that pass this check.
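As a rough sketch, the post-filtering step looks like this. The `PhotoResult` shape and `filter_by_people` are hypothetical stand-ins for whatever the vector search actually returns, and requiring *all* requested people (rather than any of them) is my assumption.

```python
from dataclasses import dataclass, field


@dataclass
class PhotoResult:
    """Hypothetical search hit: a photo path, its similarity score and the
    IDs of the people detected in that photo."""

    path: str
    score: float
    person_ids: list[int] = field(default_factory=list)


def filter_by_people(results: list[PhotoResult], wanted_ids: set[int]) -> list[PhotoResult]:
    """Keep only results containing every requested person, preserving rank order."""
    if not wanted_ids:
        # No names in the query: nothing to filter on.
        return results
    return [r for r in results if wanted_ids.issubset(r.person_ids)]
```

This is a linear pass over the results after the vector search, which is where the extra query-time cost comes from. It also means that if the search only returns the top k photos, fewer than k may survive the filter.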

This method has several flaws, and fixing it is one of the things I will be working on next week. For each person, I also store the three most recent images of their face to represent them to the user.
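Keeping only the latest three face images per person maps neatly onto a bounded `collections.deque`, which drops the oldest entry automatically once it is full. This is a hypothetical in-memory sketch; in InPhotos the previews live in the database, and the class name and newest-first ordering are my own choices.

```python
from collections import defaultdict, deque

MAX_PREVIEWS = 3  # number of recent face crops kept per person


class FacePreviews:
    """Hypothetical in-memory store of the most recent face crops per person."""

    def __init__(self, max_previews: int = MAX_PREVIEWS):
        # Each person gets a deque that silently discards its oldest item when full.
        self._recent = defaultdict(lambda: deque(maxlen=max_previews))

    def add(self, person_id: int, crop_path: str) -> None:
        self._recent[person_id].append(crop_path)

    def previews(self, person_id: int) -> list[str]:
        # Newest first; .get avoids creating an empty entry for unknown people.
        return list(reversed(self._recent.get(person_id, ())))
```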

Integrating the search with the names

Assuming we have the names of the people in the database, how do we use them in our search? Given a search query, we first need to identify the names of people in the text. This task is called Named Entity Recognition (NER). I tried out a few options, including NLTK, spaCy and a fine-tuned BERT model. However, all of them shared a fatal flaw: they only worked well if the sentence was correctly capitalized. To fix the capitalization, I used a library called truecase, which is based on a paper named “tRuEcasIng” (ngl, I love this name :) )

Once that was done, I used NLTK to extract the names from the query. After that, it is just a lookup in the database to get each person’s ID.
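Here is a sketch of how those two steps could fit together. `extract_names` truecases the query with `truecase.get_true_case`, runs NLTK’s tokenizer, POS tagger and `ne_chunk`, and flattens the resulting tree with `nltk.chunk.tree2conlltags`; it needs `nltk` and `truecase` installed plus NLTK’s tokenizer, tagger and NE-chunker data packages. The grouping helper `names_from_iob` is pure Python, and both function names are my own.

```python
def names_from_iob(tagged: list[tuple[str, str, str]]) -> list[str]:
    """Group (word, pos, iob) triples into PERSON names, e.g.
    ("Ada", "NNP", "B-PERSON"), ("Lovelace", "NNP", "I-PERSON") -> "Ada Lovelace"."""
    names, current = [], []
    for word, _pos, iob in tagged:
        if iob == "B-PERSON":
            # A new name starts; close off any name in progress.
            if current:
                names.append(" ".join(current))
            current = [word]
        elif iob == "I-PERSON" and current:
            current.append(word)
        else:
            if current:
                names.append(" ".join(current))
            current = []
    if current:
        names.append(" ".join(current))
    return names


def extract_names(query: str) -> list[str]:
    """Restore capitalization, run NLTK's NE chunker and return PERSON names."""
    import nltk
    import truecase
    from nltk.chunk import tree2conlltags

    cased = truecase.get_true_case(query)
    tree = nltk.ne_chunk(nltk.pos_tag(nltk.word_tokenize(cased)))
    return names_from_iob(tree2conlltags(tree))
```

The imports sit inside `extract_names` so the grouping logic can be used and tested without the NLP dependencies. The returned names can then be mapped to person IDs, for example with a case-insensitive dictionary lookup.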

For more information:

- NLTK Tutorial - https://realpython.com/nltk-nlp-python/#using-named-entity-recognition-ner

- Recent trends in NER - https://arxiv.org/abs/2101.11420

Adding the names to the database

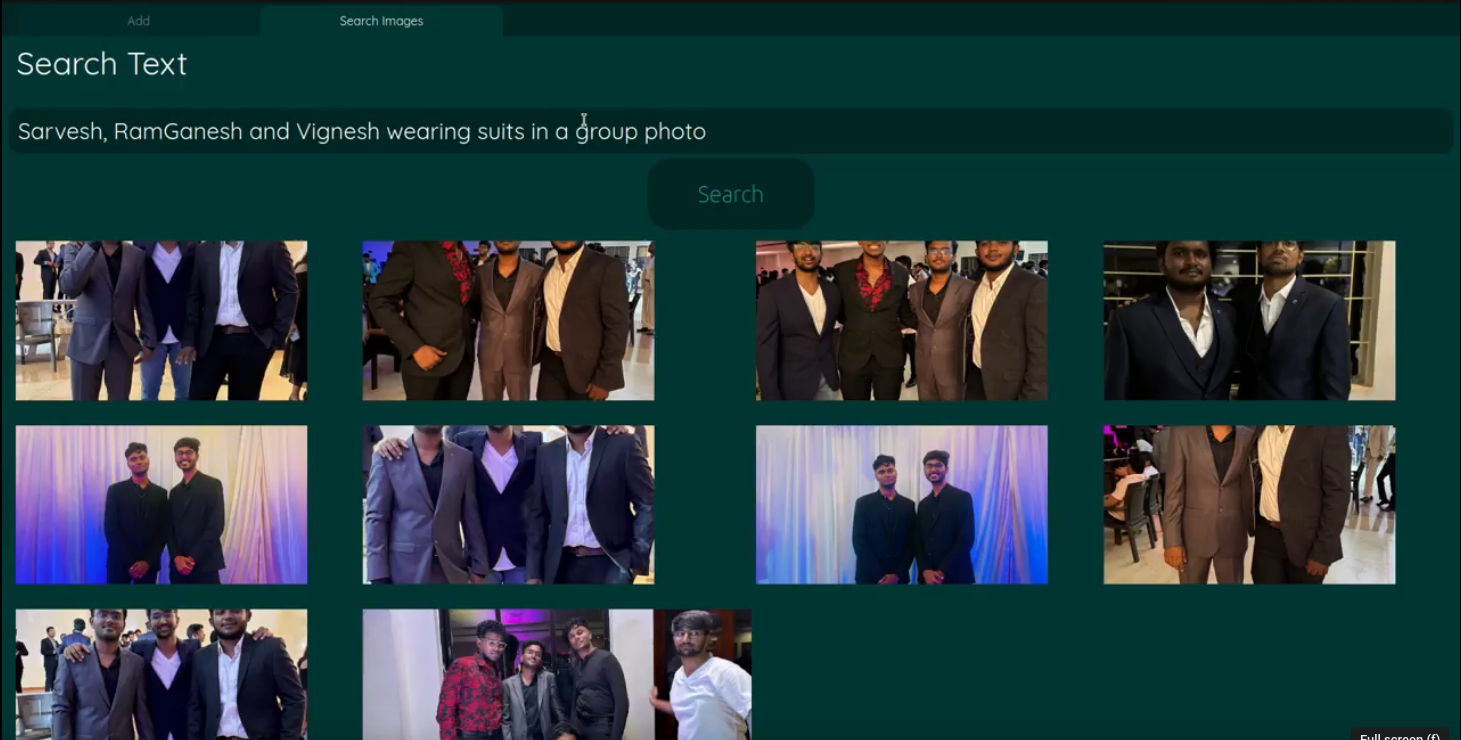

As we add images, InPhotos clusters them by face. In the Add section, it shows three recent images of each person and asks you to give them a name; once that’s done, you can search using those names.

I also updated the UI a bit and learned more about PyQt6 along the way, but I still have a long way to go before I’m comfortable with it.

So, What’s Next?

There are still a lot of issues to solve; here are a few of them:

- The Application Size is quite large

- Relational Support in the Database

- More Intuitive UI

- Package the application

- Look into Auto Updaters

Let’s see how many of these I can tackle next week.