Building InPhotos, A Local Semantic Search App for Photos

So, for season 5 of Nights & Weekends, I decided to go ahead with the following idea:

A search engine for all your photos, which you can download and use without the hassle of uploading your photos or the need for an internet connection. Everything happens on your system.

As of now, I have built a basic prototype of the product.

How did it all go down?

As with every other project, it started with a Google search. I searched for how to perform a search over images. After reading a few articles, I came across OpenAI’s CLIP model.

A brief overview of OpenAI’s CLIP

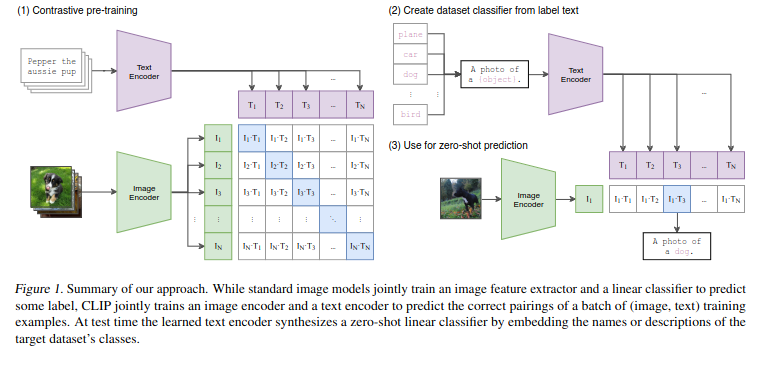

(Source: https://arxiv.org/abs/2103.00020 - Learning Transferable Visual Models From Natural Language Supervision)

Given a batch of N (image, text) pairs, CLIP is trained to predict which of the N × N possible pairings are the true ones. It learns a shared embedding space by jointly training an image encoder and a text encoder to maximize the cosine similarity of the N true pairs and minimize it for the remaining incorrect pairings. To optimize the model, it uses a symmetric cross-entropy loss over the similarity scores.
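To make the loss concrete, here is a minimal pure-Python sketch of a symmetric cross-entropy over cosine similarities. It is only an illustration of the idea, not CLIP's actual training code: the function names are my own, and the temperature is fixed at 0.07 here for simplicity, whereas in the paper it is a learned parameter.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_rows(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def clip_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: image-to-text and text-to-image cross-entropy, averaged.

    temperature is a fixed, assumed value in this sketch.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    # logits[i][j] = scaled cosine similarity between image i and text j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image view
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(logits_t))
```

When every image embedding lines up with its own caption, the diagonal of the similarity matrix dominates and the loss is close to zero. Shuffling the captions pushes it up sharply.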

The text encoder follows a Transformer architecture, while the image encoder can be either a ResNet or a Vision Transformer. We use a Vision Transformer in our application.

Fortunately, the pretrained weights are publicly available, and the model can also be used through Hugging Face's transformers library.

To learn more about CLIP, you can take a look at the following resources:

- Paper - https://arxiv.org/abs/2103.00020 - Learning Transferable Visual Models From Natural Language Supervision

- OpenAI Blog - https://openai.com/index/clip/

- An Explanation of the Paper - https://www.youtube.com/watch?v=T9XSU0pKX2E&t=358s

Now that we have an idea of how to achieve our core functionality, it is time to start developing our application.

Workflow

Roughly, our workflow will be as follows:

- The user specifies the path of the folder that contains all their photos

- Generate embeddings for these images using OpenAI CLIP

- Store them in an on-device vector store

- Whenever the user enters a search query:

  - Generate an embedding for the query

  - Find the most similar embeddings in the DB

  - Return the images corresponding to those embeddings
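The steps above can be sketched in a few lines of Python. This is a rough outline under some assumptions, not the app's actual code: `embed_image` and `embed_text` are hypothetical callables standing in for the CLIP encoders, the `Photo` record and the suffix list are illustrative, and a plain linear scan replaces the vector store.

```python
import math
from dataclasses import dataclass
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed set of supported formats

@dataclass
class Photo:
    path: str
    embedding: list

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def index_folder(folder, embed_image):
    """Walk the folder and embed every image found in it.

    embed_image is a hypothetical callable: Path -> list of floats.
    """
    photos = []
    for path in sorted(Path(folder).rglob("*")):
        if path.suffix.lower() in IMAGE_SUFFIXES:
            photos.append(Photo(str(path), embed_image(path)))
    return photos

def search(photos, query, embed_text, top_k=3):
    """Embed the query and return the paths of the top_k most similar photos.

    embed_text is a hypothetical callable: str -> list of floats.
    A linear scan is used here in place of a real vector index.
    """
    q = embed_text(query)
    ranked = sorted(
        photos, key=lambda p: cosine_similarity(q, p.embedding), reverse=True
    )
    return [p.path for p in ranked[:top_k]]
```

A linear scan like this is fine for a handful of photos, but it compares the query against every stored embedding, which is why an indexed vector store becomes useful as the library grows.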

Storing the embeddings and effectively retrieving them

I had several options for databases. I could go with Pinecone, but then we wouldn’t have offline usage, and the user’s data would be sent over the internet, which some people may not like. After that, I thought of using Postgres with pgvector, but this seemed like overkill for my purposes. In the end, I settled on ObjectBox. It is pretty simple and lightweight.

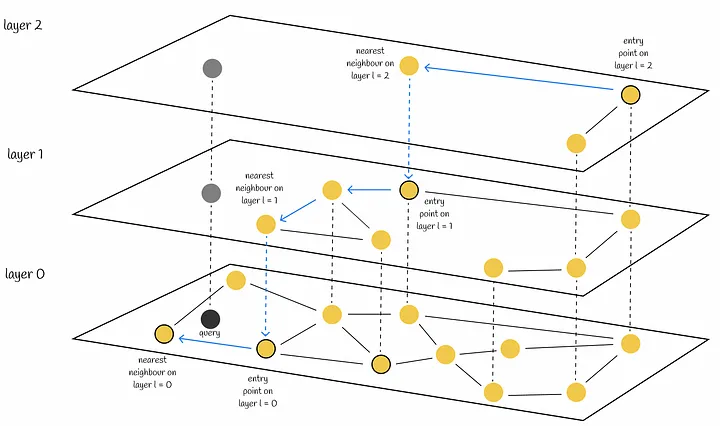

Once I had that, I designed a simple entity for photos, which has an ID field, a path to the file, and the corresponding embedding. Databases use indexes to retrieve data efficiently, so our embeddings need to be indexed for fast query results. ObjectBox uses an algorithm called Hierarchical Navigable Small World (HNSW) to index the embeddings. It is based on two important concepts: skip lists and navigable small worlds.

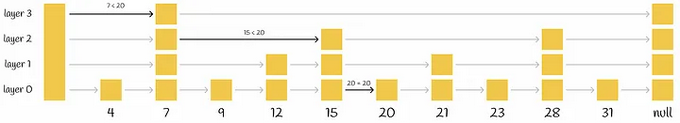

Skip lists are layered linked lists that provide O(log N) average time complexity for inserting and searching elements.

A navigable small world (NSW) is a proximity graph with polylogarithmic search complexity. Combining the layered structure of skip lists with NSW graphs gives us HNSW.
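To see where the layers come from, here is a small, self-contained skip list sketch in Python. It is a textbook-style illustration only (class names, `max_level`, and the promotion probability `p` are my own choices) and is not how ObjectBox implements anything.

```python
import random

class Node:
    def __init__(self, key, level):
        self.key = key
        # forward[i] is the next node on layer i
        self.forward = [None] * (level + 1)

class SkipList:
    def __init__(self, max_level=8, p=0.5, seed=None):
        self.max_level = max_level
        self.p = p                      # chance of promoting a node one layer up
        self.level = 0                  # highest layer currently in use
        self.head = Node(None, max_level)
        self.rng = random.Random(seed)

    def _random_level(self):
        level = 0
        while self.rng.random() < self.p and level < self.max_level:
            level += 1
        return level

    def insert(self, key):
        # update[i] = last node on layer i that comes before the new key
        update = [self.head] * (self.max_level + 1)
        node = self.head
        for i in range(self.level, -1, -1):
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
            update[i] = node
        level = self._random_level()
        self.level = max(self.level, level)
        new_node = Node(key, level)
        for i in range(level + 1):
            new_node.forward[i] = update[i].forward[i]
            update[i].forward[i] = new_node

    def contains(self, key):
        node = self.head
        # Start on the sparsest layer and drop down whenever we would overshoot
        for i in range(self.level, -1, -1):
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
        node = node.forward[0]
        return node is not None and node.key == key
```

The upper layers act as express lanes: a search skips over large stretches of the list at the top and only walks node by node once it reaches the bottom layer.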

(Source - https://towardsdatascience.com/similarity-search-part-4-hierarchical-navigable-small-world-hnsw-2aad4fe87d37)

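When we make a query:

- We start in the top layer, which has the fewest nodes, and keep moving to the neighbor closest to the query until no neighbor is closer

- Then we drop down to the next layer, which has more nodes, and repeat the same step from that node to get even closer, until we reach the bottom layer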
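The layer-by-layer descent can be sketched as follows. This is a deliberately simplified version: real HNSW implementations keep a list of candidates per layer rather than a single node, and build the graph automatically. The tiny hand-built graph, the 2D points, and the use of Euclidean distance (instead of cosine) are all assumptions made to keep the example readable.

```python
import math

def greedy_search_layer(graph, vectors, entry, query):
    """Move to the closest neighbor until no neighbor is closer to the query."""
    current = entry
    while True:
        best = min(
            graph.get(current, ()),
            key=lambda n: math.dist(vectors[n], query),
            default=current,
        )
        if math.dist(vectors[best], query) >= math.dist(vectors[current], query):
            return current
        current = best

def layered_search(layers, vectors, entry, query):
    """Run the greedy search on each layer, from sparsest to densest."""
    current = entry
    for graph in layers:
        current = greedy_search_layer(graph, vectors, current, query)
    return current

# Hand-built toy data: node id -> 2D point
VECTORS = {0: (0, 0), 1: (5, 0), 2: (10, 0), 3: (2, 0), 4: (8, 0), 5: (9, 1)}

# Adjacency lists per layer, top (fewest nodes) first
LAYERS = [
    {0: [2], 2: [0]},
    {0: [1], 1: [0, 2], 2: [1]},
    {0: [3], 3: [0, 1], 1: [3, 4], 4: [1, 5, 2], 5: [4, 2], 2: [4, 5]},
]

nearest = layered_search(LAYERS, VECTORS, entry=0, query=(8.6, 0.8))
```

In this toy graph the top layer jumps straight from node 0 to node 2, and the bottom layer then refines the result to node 5, so most of the distance is covered while only a few nodes are visited.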

For the distance measure, I went with the standard choice of cosine similarity.

To learn more, refer to these resources:

- ObjectBox - https://docs.objectbox.io/getting-started

- Skip Lists - https://www.geeksforgeeks.org/skip-list/

- HNSW - https://towardsdatascience.com/similarity-search-part-4-hierarchical-navigable-small-world-hnsw-2aad4fe87d and https://www.pinecone.io/learn/series/faiss/hnsw/

UI and Integration

Now that we are done with the database, it is time to work on the user interface. I want this to be a desktop application. Initially, I thought I would use an Electron-like framework such as Eel, but that would be too heavy because our application will also be running an encoder on-device. It was also a hassle to show images in the interface.

(Note: to show images in Eel, we have to send the images to the frontend as Base64-encoded strings and use them in the src attribute of the image tag)
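For reference, the Base64 step mentioned in the note looks roughly like this in Python. The helper names are my own, and how the resulting string is passed to the frontend is left out. The sketch only shows how an image file becomes a string that can be placed in the src attribute.

```python
import base64
import mimetypes

def bytes_to_data_uri(data, mime_type):
    """Encode raw bytes as a data URI that an image tag can use as its src."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"

def image_to_data_uri(path):
    """Read an image file and return it as a data URI.

    Falls back to a generic MIME type when the extension is not recognized.
    """
    mime_type, _ = mimetypes.guess_type(path)
    with open(path, "rb") as f:
        return bytes_to_data_uri(f.read(), mime_type or "application/octet-stream")
```

Base64 encoding makes the payload about a third larger than the original file, so every image shown this way costs extra memory and transfer time.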

Thus, I decided to drop the Electron-like idea and went with PyQt6, a Python binding for the Qt framework. After coding the UI and linking everything together, we have our first version of InPhotos. I have planned a lot of features and will be posting a roadmap on my social handles.

You can find the GitHub repo here. I will soon upload the releases for the first version of InPhotos. Be on the lookout for that. If you want to have a chat with me, feel free to text me on X or LinkedIn, or you could very well send me an email.